report

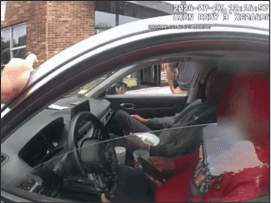

AI-powered video analysis that transforms body-cam footage into comprehensive, ready-to-file reports.

redactions

Automated detection and redaction of faces, license plates, and sensitive content in video footage.

A multimodal stack compresses video and audio into aligned evidence, then constrains generation to what is verifiable.

Multimodal Encoding

Unifies video, audio, and metadata into a shared temporal embedding. Temporal features capture motion, speech cadence, and scene context at frame-level resolution.

Evidence Alignment

Links each detected event to its source timestamps so outputs stay evidence-traceable. Every event anchor carries a confidence score.
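As an illustration only, an event anchor could be modeled as a small record holding a label, a time span in the source video, and a confidence score. The `EventAnchor` class, the `traceable_anchors` helper, the 0.5 threshold, and the sample values below are all hypothetical and are not taken from the product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EventAnchor:
    """Hypothetical record tying one detected event to its source footage."""
    label: str
    start_s: float     # offset into the source video, in seconds
    end_s: float
    confidence: float  # assumed to lie in [0, 1]


def traceable_anchors(anchors, min_confidence=0.5):
    """Keep anchors at or above the threshold, ordered by start time."""
    kept = [a for a in anchors if a.confidence >= min_confidence]
    return sorted(kept, key=lambda a: a.start_s)


# Made-up sample data for the sketch.
anchors = [
    EventAnchor("vehicle stop", 12.0, 19.5, 0.94),
    EventAnchor("door opens", 4.2, 5.0, 0.31),
    EventAnchor("verbal command", 8.7, 10.1, 0.88),
]

for a in traceable_anchors(anchors):
    print(f"{a.start_s:6.1f}s-{a.end_s:6.1f}s  {a.label}  (conf={a.confidence:.2f})")
```

Keeping the time span on the record itself means any sentence derived from an anchor can point back to the exact footage it came from.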

Narrative Decoding

Generates report-ready prose constrained by the evidence graph. Decoding stays grounded and emits citations for auditability.
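One simple way to audit cited prose is a post-hoc check that every sentence references a known piece of evidence. The sketch below is not constrained decoding and not the product's method; it assumes a hypothetical citation format of `[E<number>]` and a hypothetical `unsupported_sentences` helper.

```python
import re

# Assumed citation format for this sketch: [E1], [E2], ...
CITATION = re.compile(r"\[(E\d+)\]")


def unsupported_sentences(sentences, evidence_ids):
    """Return sentences with no citation or with a citation to unknown evidence."""
    flagged = []
    for sentence in sentences:
        cited = CITATION.findall(sentence)
        if not cited or any(c not in evidence_ids for c in cited):
            flagged.append(sentence)
    return flagged


# Made-up draft narrative and evidence set.
evidence_ids = {"E1", "E2"}
draft = [
    "The officer approached the vehicle [E1].",
    "The driver handed over a license [E2].",
    "The driver appeared nervous.",
    "A second vehicle arrived [E7].",
]

flagged = unsupported_sentences(draft, evidence_ids)
for sentence in flagged:
    print("needs evidence:", sentence)
```

Here the third sentence is flagged because it cites nothing, and the fourth because `E7` is not in the evidence set.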

Scientific, evidence-first model development

Proprietary models are trained on lawfully sourced, de-identified corpora and controlled simulations, prioritizing temporal alignment and evidence grounding.

Customer operational data is never used to train shared models.

Data sourcing

- Licensed, de-identified public safety datasets.

- Public-domain incident narratives and dispatch records.

- Controlled reenactments and synthetic augmentation.

Technique mix

- Self-supervised pretraining across motion, audio, and context.

- Active learning with expert review.

- Evidence-grounded decoding with continuous evaluation.

Evidence to narrative in three steps

Frames are sampled, linked into an evidence web, then processed on dedicated GPU servers for grounded output.

Frame Sampling

Dense timeline coverage.
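A minimal sketch of fixed-stride sampling, assuming a known frame count and frame rate. The `sample_frames` function and its parameters are hypothetical; the page does not say how frames are actually selected.

```python
def sample_frames(total_frames, fps, stride):
    """Return (frame_index, timestamp_s) pairs, taking every `stride`-th frame."""
    if total_frames < 0 or fps <= 0 or stride <= 0:
        raise ValueError("total_frames must be >= 0; fps and stride must be > 0")
    return [(i, i / fps) for i in range(0, total_frames, stride)]


# Example: a 3-second clip at 30 fps, sampled twice per second.
samples = sample_frames(total_frames=90, fps=30.0, stride=15)
for index, timestamp in samples:
    print(f"frame {index:3d} at {timestamp:.1f}s")
```

A smaller stride gives denser timeline coverage at the cost of more frames to process downstream.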

Evidence Web

Keyframes are decomposed into pixel tiles, then analyzed across frame subsets.
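To make the tiling step concrete, the sketch below splits a frame of a given size into a grid of pixel boxes, clipping tiles at the right and bottom edges. The `tile_boxes` function, the 256-pixel tile size, and the 1280x720 frame are assumptions for illustration; the cross-frame analysis is not shown.

```python
def tile_boxes(width, height, tile):
    """Return (x0, y0, x1, y1) boxes covering a width x height frame, row by row."""
    if width <= 0 or height <= 0 or tile <= 0:
        raise ValueError("width, height, and tile must be positive")
    boxes = []
    for y0 in range(0, height, tile):
        for x0 in range(0, width, tile):
            # Edge tiles are clipped so no box extends past the frame.
            boxes.append((x0, y0, min(x0 + tile, width), min(y0 + tile, height)))
    return boxes


# Example: a 1280x720 keyframe with 256-pixel tiles gives a 5 x 3 grid.
boxes = tile_boxes(width=1280, height=720, tile=256)
print(len(boxes), "tiles; last tile:", boxes[-1])
```

Each box can then be cropped from the keyframe and analyzed independently, with its coordinates preserved for tracing results back to a region of the frame.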

GPU Output

Inference on dedicated GPU servers.